3 Microdata

3.1 Introduction

There is a strong, widespread and increasing demand for NSIs to release Microdata Files (MF), that is, data sets containing for each respondent the score on a number of variables. Microdata files are samples generated from business or social surveys or from the Census or originate from administrative sources. It is in the interest of users to make the microdata as detailed as possible but this interest conflicts with the obligation that NSIs have to protect the confidentiality of the information provided by the respondent.

In Section 1.1 two definitions of disclosure were provided: re-identification disclosure and attribute disclosure. In the microdata setting the re-identification disclosure concept is used as we are releasing information at individual level. When releasing microdata, an NSI must assess the risk of re-identifying statistical units and disclosing confidential information. There are different options available to NSIs for managing these disclosure risks, namely applying statistical disclosure control techniques, restricting access or a combination of the two.

Applying SDC methods leads to a loss of information and statistical content and affects the inferences that users are able to make on the data. The goal for an effective statistical disclosure control strategy is to choose optimum SDC techniques which maximize the utility of the data while minimizing the disclosure risk. On the other hand, the user of protected microdata should obtain most important information on the expected information loss due to SDC process. It enables the assessment of the impact of changes in the original data resulting from the need to protect statistical confidentiality on the quality of the final results of the estimates and analyzes carried out by him/her.

In general, two types of microdata files are released by NSIs, namely public use files (PUF) and research use files (MFR). The disclosure risk in public use files is entirely managed by the design of the file and the application of SDC methods. For research use microdata files SDC methods will be applied in addition to some restrictions on access and use, e.g. under a licence or access agreement, such as those provided by Commission Regulation 831/2202, see Section 2.3. Necessarily the research release files contain more detail than the public use files. The estimated expected information loss should be computed both as total and for each variable separately, if possible.

Some NSIs will also provide access to microdata in datalaboratories/research centres or via remote access/execution. Datalabs allow approved users on-site access to more identifiable microdata. Typically datalab users are legally prohibited from disclosing information and are subject to various stringent controls, e.g. close supervision on-site to protect the security of the data and output checking, to assist with disclosure control. For remote execution researchers are provided with a full description of the microdata. They then send prepared scripts to the NSI who run the analysis, check and return the results. Remote access is a secure on-line facility where the researchers connect to the NSI’s server (via passwords and other security devices) where the data and programs are located. The researchers can submit code for analysis of microdata or in some instances see the files and programs ‘virtually’ on their desktops. Confidentiality protection is by a combination of microdata modification, automatic checks on output requested, manual auditing of output and a contractual agreement. The researcher does not have complete access to the whole data itself; however they may have access to a small amount of unit record information for the purpose of seeing the data structure before carrying out their analysis.

Section 3.2 goes through the whole process of creating a microdata file for external users from the original microdata. The aim of such section is to briefly analyse the different stages of the disclosure process providing references to the relevant sections where each step will be described in more details. Section 3.6 is dedicated to the software. Sections 3.7 and 3.8 provide some examples. Further and more detailed examples can be found in Case studies available on the CASC website (https://research.cbs.nl/casc/Handbook.htm#casestudies). Chapter 6 of the current handbook provides more details on different microdata access issues such as research centres, remote access/execution and licensing.

3.2 A roadmap to the release of a microdata file

This section aims at introducing the reader to the process that, starting from the original microdata file as it is produced by survey specialists, ends with the creation of a file for external users. This roadmap will drive you through the six stage process for disclosure, mostly outlined in Section 1.2 i.e.

- why is confidentiality protection needed;

- what are the key characteristics and use of the data;

- disclosure risk (ex ante);

- disclosure control methods;

- implementation;

- assessment of disclosure risk and utility (ex post)

Specifying, for each stage, the peculiarities of microdata release. In Table 3.1 we present an overview of the process.

| Stage of disclosure process | Analyses to be carried out / problem to be addressed ⇓ Results expected |

| 1. Why is confidentiality protection needed | Does the data refer to individuals or legal entity? ⇓ We need to protect the statistical unit |

| 2. What are the key characteristics and use of the data | Analysis of the type/structure of the data ⇓ Clear vision of which units need protections |

| Analysis of survey methodology ⇓ Type of sampling frame, sample/complete enumeration of strata, further analysis of survey methodology, calibration |

|

| Analysis of NSI objectives ⇓ Type of release (PUF, MFR),dissemination policies, peculiarities of the phenomenon, coherence between multiple releases (PUF and MFR), coherence with released tables and on-line databases, etc. |

|

| Analysis of user needs ⇓ Priorities for variables, type of analysis, etc. |

|

| Analysis of the questionnaire ⇓ List of variables to be removed, variables to be included, some ideas of level of details of structural variables |

|

| 3. Disclosure risk (ex ante) | Disclosure scenario ⇓ List of identifying variables |

| Definition of risk ⇓ Risk measure |

|

| Risk assessment ⇓ If the risk is deemed too high need of disclosure limitation methods |

|

| 4. Disclosure limitation methods | Analysis of type of data involved, NSI policies and users needs ⇓ Identification of a disclosure limitation method |

| Information loss analysis | |

| 5. Implementation | Choice of software, parameters and thresholds for different methods |

| 6. assessment of disclosure risk and utility (ex post) | Ex post analysis of disclosure risk and information loss ⇓ In case disclosure risk and/or utility loss is too high, return to step 4 or 5. |

The idea is to identify for each stage of the process choices that have to be made, analyses that need to be done, problems that need to be addressed and methods to be selected. References to the relevant sections where technical topics are discussed in detail will help the beginners in following the process without getting lost in too technical aspects.

We now analyse in turn each of the six stages.

3.2.1 Need of confidentiality protection

The starting point deals with the need of confidentiality protection which is at the base of any release of microdata. If the microdata do not refer to legal entity or individual persons it can be released without confidentiality protection: an example is the amount of rain fall in a region. If microdata pertain only of public variables, in most cases they might be released: the legislation usually treats such data as excluded from statistical confidentiality. However, in general, data refer to individual or enterprises and contains confidential variables (health related data, income, turnover, expenses, etc.) and therefore need to be protected.

3.2.2 Characteristics and uses of microdata

Of course different levels of protection are needed for different type of users. This theme leads us to the second stage of the process i.e. the study of the key uses and characteristics of the data. Here the initial question is whether the microdata file we are going to release is intended for a general public (public use file) or whether it is created for research purpose (research use files). In the latter case the microdata will be released according to predefined procedures and legal binding (see also Section 6.5). The difference in user’s type implies different user’s needs, different disclosure scenarios, different types of analyses we expect to be performed with the released data, different statistics we may intend to preserve and different amount of protection we intend to apply. We now analyse all these issues in terms.

Type and structure of data

Analysis of user needs involves first a study of the survey information content. This should be done together with a survey expert that has a deeper knowledge of the data, phenomenon and possible types of analysis that can be performed on the data.

Typical questions that need to be addressed are:

Which statistical units are involved in the survey? Individuals, enterprises, households, etc. The type of units has a big influence on the risk assessment stage.

Do data present a particular structure? Hierarchical data: students inside schools, graduates inside universities, employees inside an enterprise, individual inside household etc. If this is the case care needs to be taken in checking both levels/types of units involved. E.g., do schools/universities/enterprises need to be protected besides students/graduates/employees?

What type of sampling design has been used? Are there strata (or units of earlier stages in a two- or multistage sampling design) which have been censured? Of course a complete enumeration of a strata (typical in business surveys) implies different and higher risks than a sample. Is two- or multistage sampling used with different types of units in the different stages?

An analysis of the questionnaire is useful to analyse the type of information present in the file: possible identifying variables (of which identifiers and quasi-identifiers), confidential variables and sensitive variables.

Preliminary work on variables

In this stage the setting of objectives from the viewpoint of the NSI and the user are defined. From the NSI side dissemination policies are clarified (e.g. level of dissemination of NACE, geography, etc. or coherence with published tables). From the user point of view a list of priorities in the structural variables of the survey, requests for minimum level of details for such variables and type of analysis to be performed (ratios, weighted totals, regressions, etc).

The characteristics of the phenomenon under study should also be considered as well as the dissemination policy of the Statistical Institute. This is particularly true for example in business data where some NACE classifications may never be released by their own, but always aggregated with others. Such a-priori aggregations generally depend on the economic structure of the country. It is not a sampling or dissemination problem, but rather a feature of the surveyed phenomenon. This will bring to aggregation of categories of some identifying variables deemed too detailed.

The output of this questionnaire analysis should be a preliminary list of variables to be removed and those to be released (because relevant to users need) together with some ideas of their level of details (depending on whether we are releasing a public use file or a research use file). Some examples to clarify these ideas. Variables that shouldn’t be released comprise variables used as internal checks (e.g. some paradata), flags for imputation, variables that were not validated, variables deemed as not useful because containing too many missing values, information on the design stratum from which the unit comes from etc. Obviously also direct identifiers should not be released. The case studies A1 and A2 on microdata release provide examples of such stage.

Categories of identifying variables with too significant identifying power are commonly aggregated into a single category.

This is particularly true when releasing public use files as certain variables when too detailed could retain a level of “sensitivity”. This may not be felt useful and/or appropriate for the general public. For example, in an household expenditure survey we might avoid releasing for the public use file very detailed information on the expenditure for housing (mortgage, rent) or detailed information on the age of the house or its number of rooms (when this is very high) as these might be considered as giving too much information for particular outlying cases.

Geography

Another example is related to the level of geographical details that maybe different for a public use file or a research use file (especially if a data limitation technique is used). This happens because geographical information is a strongly identifying variable. Moreover, the geographical information collected from the respondent may be available in different variables for different purposes (place of birth, place of residence, place of work, place of study, commuting, etc.). All such geographical details need to be coherent/consistent throughout the file. To this end it may be convenient releasing relative information instead of absolute one: for example place of residence can be given at a certain detail (e.g. region) and then the other geographical information (place of work, study etc.) can be released with respect to this one. Examples of possible relative recodings (e.g. with respect to region of residence) are: region of work same as region of residence, different region but same macroregion, different macroregion.

Coherence with published tables

At this initial stage of the analysis information should be collected on what has already been published/what it is going to be released from the microdata set: dissemination plan, which type of tables and what classification/aggregation was used for the variables. This is to avoid different classifications in different release: the geographical breakdown, as well as classification of other variables in the survey (e.g. age, type of work etc.), should be coherent with the published marginals. For example, if a certain classification of the variable age is published in a table the microdata file should use a classification which has compatible break points so that to avoid gaining information by differencing. Release of date of birth is highly discouraged. Also, as far as possible, published totals should be preserved for transparency.

3.2.3 Disclosure risk (ex ante)

Moreover, in case of multiple release of the same survey (e.g. PUF and microdata for research) coherence should be maintained also between different released files in the sense that releasing different files at the same time shouldn’t allow the gaining of more information than for one file alone (see, Trottini et al., 2006). The principles apply also to the release of longitudinal or panel microdata, where the differences between records pertaining to the same case in different waves will reflect ‘events’ that have occurred to that case, as well as the attributes of the individuals.

Once the characteristics and uses of the survey data are clear, it is time to start the real analysis of the disclosure risk in relation to files with originally collected data – ex ante assessment (in one of next subsections we will indicated also on a necessity of making ex post assessment of disclosure risk to verify effciency of used SDC methods). This implies first a definition of possible situations at risk (disclosure scenarios) and second a proper definition of the ‘risk’ in order to quantify the phenomenon (risk assessment).

Disclosure scenario

A disclosure scenario is the definition of realistic assumptions about what an intruder might know about respondents and what information would be available to him to match against the microdata to be released and potentially make an identification and disclosure.

Again different types of releases may require different disclosure scenarios and different definitions of risk. For example the nosy neighbourhood scenario described in Section 3.3.2, possibly with knowledge of the presence of the respondent in the sample (implying that sample uniques are a relevant quantity of interest for risk definition), may be deemed adequate for a public use file. A different trust might be put in a researcher that needs to perform an analysis for research purposes. This implies, as a minimum step, a higher level of acceptable risk and a different scenario the spontaneous identification scenario.

Spontaneous recognition

Spontaneous recognition is possible when the researchers unintentionally recognize some units. For example, when releasing enterprise microdata, it is publicly known that the largest enterprises are generally included in the microdata file because of their significant impact on the studied phenomenon. Moreover, the largest enterprises are also the most identifiable ones as recognisable by all (the largest car producer factory, the national mail delivery enterprise, etc.). Consequently, a spontaneous identification or recognition might occur. A description of different scenarios is presented in Section 3.3.2; examples of spontaneous identification scenarios for MFR are reported in case studies A1 and A2.

Definition of risk

From the adopted scenario we can extract the list of identifying variables i.e. the variables that may allow the identification of a unit. These will be the basis for defining the risk of disclosure. Intuitively, a unit is at risk of identification when it cannot be confused with several other units. The difficulty is to express this simple concept using sound statistical methodology.

Different approaches are used if the identifying variables are categorical of continuous. In the former case at the basis of the definition is the concept of a ‘key’ (i.e. the combination of categories of the identifying variables): see Section 3.3.1 for a classification of different definitions. Whereas if continuous identifying variables are present in the file a possibility is to use the concept of density: see Ichim (2009) for a detailed analysis of definitions of risk in the case of continuous variables. Of course, the problem is even more complicated when we deal with a mixture of categorical and numerical key variables; for an example of this situation (quite common in enterprise microdata) see case study A1 (Community Innovation Survey). Another solution in this context can be the assessment of disclosure risk based on the (expected) number of units for which a value of the given continuous variable falls into the respectively defined (using established threshold of deviation) neighborhood of a given observation. This approach can be perceived as some variation of \(k\)-anonymity in this case.

Risk assessment

Once a formal definition of risk has been chosen we need to measure/estimate it. There are several possibilities for categorical identifying variables (these are reported in various subsections of Section 3.3) and for a mixture of categorical and continuous identifying variable we have already mentioned Case study A1. The final step of the risk assessment is the definition of a threshold to define when a unit or a file presents an acceptable risk and when, on the contrary, it has to be considered at risk. This threshold depends of course on the type of measure adopted and details on how to choose a threshold are reported in the relevant subsequent sections.

Choice of scenarios and level of acceptable risk are extremely dependent on different cultural situations in different member states, different policies applied by different institutes, different approaches to statistical analysis, different perceived risk. To this end it must be stressed that different countries may have extremely different situation/phenomenon therefore different scenarios and risk methods are indeed necessary.

Currently there is no general agreement on which risk methodology is best although different methods give in general similar answers for the extreme cases. However, as already stated in Section 3.3.1, there is a strong need to further compare and understand differences between available methods. Pros and cons of each method are described in the relevant sections may be used as a guidelines for the most appropriate choice of the risk estimation in different situations. Further advice can be gained by studying of the examples and case studies.

3.2.4 SDC-methods

If the risk assessment stage shows that the disclosure risk is high then the application of statistical disclosure limitation methods is necessary to produce a microdata file for external users.

Masking methods

Microdata protection methods can generate a protected microdata set either by masking original data, i.e. generating a modified version of the original microdata set or by generating synthetic data that preserve some statistical properties of the original data. Synthetic data are still difficult to implement; a description can be found in Section 3.4.7. Masking methods are divided into two categories depending on their effect on the original data (Willenborg and De Waal, 2001): perturbative and non perturbative masking methods.

Perturbative methods either modify the identifying variables or modify the confidential variables before publication. In the former way, unique combinations of scores of identifying variables in the original dataset may disappear and new unique combinations may appear in the perturbed dataset. In this way a user cannot be certain of an identification. Alternatively confidential variables can be modified; in this case even if an identification occurs, the wrong value is associated and disclosure of the original value is avoided (for an example of this case see case study A2). For a description of a variety of perturbative methods see sections 3.4.2, 3.4.4, 3.4.5 and 3.4.6.

Non-perturbative methods do not alter the values of the variables (either identifying or confidential); rather, they produce a reduction of detail in the original dataset. Examples of non-perturbative masking are presented in Section 3.4.3.

The choice between a data reduction and a data perturbation method strongly depends on the policy of an institute and on the type of data/survey to be released. While the policy of an institute is outside of this debate, technical reasons may suggest the use of perturbative methods for the protection of continuous variables (mainly business data). Analysis of information loss should always be part of the selection process. The usual difference between types of release remains valid and it is linked to the difference between users needs. Again the examples and the case studies A1 and A2 may help in clarifying different situations.

User needs and types of protection

From the needs of the users and the types of analyses that could be performed on the data one could gain information for the choice of the type of protection that could be applied to the microdata. Also users could express priorities in the need of maintaining some variables intact (e.g., for business usually NACE is the most important variable, then employees, and so on).

Information loss

For research purposes maybe we could be interested in maintaining the possibility of being able to reproduce the published tables. For a public use file maybe we could avoid, as much as possible, the use of local suppression as this may render data analysis difficult for non sophisticated users. In general, the implementation of perturbative methods should take into account what variables and relationships among them need to be kept from the user point of view. An assessment of information loss caused by the protection methods adopted is highly recommended. A brief description of information loss measures is reported in Section 3.5; examples of how to check in practice the amount of distortion or modification in the protected microdata is presented in case studies A1 and A2.

Finally, every time a data perturbation method is applied attention should be placed at relationships between different types of release (PUF, MFR, tables) so as to avoid as much as possible, different marginal totals from different sources.

An example of the application of this reasoning for the definition of a dissemination strategy can be found, for example, in Trottini et al. (2006).

3.2.5 Implementation

The next stage of the SDC process is the implementation of the whole procedure, choice of software, parameters and levels of acceptable risks.

Documentation is an essential part of any dissemination strategy both for auditing from external authorities and transparency towards users. The former may include description of legal and administrative steps for a risk management policy together with the technical solution applied. The latter is essential for a user to understand what has been changed or limited in the data because of confidentiality constraints. If a data perturbation method has been applied then, for transparency reasons, this should be clearly stated. Information on which statistics have been preserved and which have been modified and some order of magnitude of possible changes should be provided as far as possible. If a data reduction method has been applied with some local suppression then the distribution of such suppressions should be given for a series of different dimensions of interest (distribution by variables, by household size, household type, etc.) and any other statistics that are deemed relevant for the user. The released microdata should be obviously accompanied by all necessary metadata and information on methodologies used at various stage of the survey process (sampling, imputation, validation, etc.) together with information on magnitude of sampling errors, estimation domains etc.

3.2.6 Ex post assessment of disclosure risk and information loss

The last - but, of course, not least - stage of the procedure is the ex post assessment of disclosure risk and computation of the expected information loss due to SDC. The ex post risk assessment (usually made using the same measures as in the case of ex ante assessement, for comparability) allows for confirmation whether the used procedure eliminates or sufficiently reduces the threat of unit identification or not. If not, a modification of used methods (e.g. by changing some tools, modification of parameters, etc.) should be made. This means back to either the step “Disclosure limitation methods” or the step “Implementation”.

An assessment of information loss caused by the applied protection methods is highly recommended. The knowledge of possible loss of information is key for data utility for possible users. If the information loss is too great then the used methods or their parameterization should be changed (coming back to step “Disclosure limitation methods”). One should remain that simultaneously the disclosure risk should be also as small as possible. Thus, these quantities should be harmonized. A detailed description of information loss measures is reported in Section 3.5; examples of how to check in practice the amount of distortion or modification in the protected microdata is presented in case studies A1 and A2.

Of course, the results of computation of discloure risk (both final and indirect, if applicable) and information loss should be saved in the documentation of the whole process. However, whereas the values of measures of disclosure risk are confidential and known only by entitled staff of the data holder, the level of expected information loss should be made available to the user. It is a very important factor influencing the quality of the final analysis results obtained by him/her.

3.3 Risk assessment

3.3.1 Overview

Microdata has many analytical advantages over aggregated data, but also poses more serious disclosure issues because of the many variables that are disseminated in one file. For microdata, disclosure occurs when there is a possibility that an individual can be re-identified by an intruder using information contained in the file, and when on the basis of that, confidential information is obtained. Microdata are released only after taking out directly identifying variables, such as names, addresses, and identity numbers. However, other variables in the microdata can be used as indirect identifying variables. For individual microdata this are variables such as gender, age, occupation, place of residence, country of birth, family structure, etc. and for business microdata variables such as economic activity, number of employees, etc. These (indirect) identifying variables are mainly publicly available variables or variables that are present in public databases such as registers.

If the identifying variables are categorical then the compounding (cross-classification) of these variables defines a key. The disclosure risk is a function of such identifying variables/keys either in the sample alone or in both the sample and the population.

To assess the disclosure risk, we first need to make realistic assumptions about what an intruder might know about respondents and what information will be available to him to match against the microdata and potentially make an identification and disclosure. These assumptions are known as disclosure risk scenarios and more details and examples are provided in the next section of this handbook. Based on the disclosure risk scenario, the identifying variables are determined. The other variables in the file are confidential or sensitive variables and represent the data not to be disclosed. NSIs usually view all non-publicly available variables as confidential/sensitive variables regardless of their specific content, though there can be some variables, e.g. sexual identity, health conditions, income, that can be more sensitive.

In order to undertake a risk assessment of microdata, NSIs might rely on ad-hoc methods, experience and checklists based on assessing the detail and availability of identifying variables. There is a clear need for obtaining quantitative and objective disclosure risk measures for the risk of re-identification in the microdata. For microdata containing censuses or registers, the disclosure risk is known as we have all identifying variables available for the whole population. However, for microdata containing samples the population base is unknown or partially known through marginal distributions. Therefore, probabilistic modelling or heuristics are used to estimate disclosure risk measures at population level, based on the information available in the sample. This section provides an overview of methods and tools that are available in order to estimate quantitative disclosure risk measures.

Intuitively, a unit is at risk if we are able to single it out from the rest. The idea at the base of the definition of risk is a way to measure rareness of a unit either in the sample or in the population.

When the identifying variables are categorical (as it is usually the case in social surveys) the risk is cast in terms of the cells of the contingency table built by cross-tabulating the identifying variables: the keys. Consequently all the records in the same cell have the same value of the risk.

A classification of risk measures

Several definitions of risk have been proposed in the literature; here we focus mainly on those for which tools are available to compute/estimate them easily. We can broadly classify disclosure risk measures into three types: risk measures based on keys in the sample, those based on keys in the population and that make use of statistical models or heuristics to estimate the quantities of interest and those based on the theory of record linkage. Whereas the first two classes are devoted to risk assessment for categorical identifying variables the third one may be used for categorical and continuous variables.

Risk based on keys in the sample

For the first class of risk measures a unit is at risk if its combination of scores on the identifying variables is below a given threshold. The threshold rule used within the software package \(\mu\)‑ARGUS is an example of this class of risk measures.

Risk based on keys in the population

For the second type of approach we are concerned with the risk of a unit as determined by its combination of scores on the identifying variables within the population or its probability of re-identification. The idea then is that a unit is at risk if such quantity is above a given threshold. Because the frequency in the population is generally unknown, it may be estimated through a modelling process. Examples of this reasoning are the individual risk of disclosure based on the Negative Binomial distribution developed by Benedetti and Franconi (1998) and Franconi and Polettini (2004), which is outlined in Section 3.3.5, and the one based on the Poisson distribution and log-linear models developed by Skinner and Holmes (1998) and Elamir and Skinner (2004) which is described in Section 3.3.6 along with current research on other probabilistic methods. Another approach based on keys in the population is the Special Uniques Detection (SUDA) Algorithm developed by Elliot et al. (2002) that uses a heuristic method to estimate the risk; this is outlined in Section 3.3.7.

Risk based on record linkage

When identifying variables are continuous we cannot exploit the concept of rareness of the keys and we transform such concept into rareness in the neighbourhood of the record. A way to measure rareness in the neighbourhood is through record linkage techniques. This third class of disclosure risk is covered in Section 3.3.8.

Section 3.3.2 provides an introduction to disclosure risk scenarios and Section 3.3.3 introduces concepts and notation used throughout this chapter. Sections to 3.3.8 describe different approaches to microdata risk assessment as specified above. However, as microdata risk assessment is a novelty in statistical research there isn’t yet agreement on what method is the best, or at least best under given circumstances. In the following sections we comment on various approaches to risk measures and try to give advice on situations where they could or could not be applied. In any case, it has been recognised that research should be undertaken to evaluate these different approaches to microdata risk assessment, see for example Shlomo and Barton (2006).

The focus of these methods and this section of the handbook is for microdata samples from social surveys. For microdata samples from censuses or registers the disclosure risk is known. Business survey microdata are not typically released due to their disclosive nature (skewed distributions and very high sampling fractions).

In Section 3.7 we make some suggestions on practical implementation and in Section 3.8 we give examples of real data sets and ways in which risk assessment could be carried out.

3.3.2 Disclosure risk scenarios

The definition of a disclosure scenario is a first step towards the development of a strategy for producing a “safe” microdata file (MF). A scenario synthetically describes (i) which is the information potentially available to the intruder, and (ii) how the intruder would use such information to identify an individual i.e. the intruder’s attack means and strategy. Often, defining more than one scenario might be convenient, because different sources of information might be alternatively or simultaneously available to the intruder. Moreover, re-identification risk can be assessed keeping into account different scenarios at the same time.

We refer to the information available to the intruder as an External Archive (EA), where information is provided at individual level, jointly with directly identifying data, such as name, surname, etc. The disclosure scenario is based on the assumption that the EA available to the intruder is an individual microdata archive. That is, for each individual directly identifying variables, and some other variables are available. Some of these further variables are assumed to be available also in the MF that we want to protect. The intruder’s strategy of attack would be to use this overlapping information to match direct identifier to a record in the MF. The matching variables are then the identifying variables.

We consider two different types of re-identification, spontaneous recognition and re-identification via record matching (or linkage) according to the information we assume to be available to the intruder. In the first case we consider that the intruder might rely on personal knowledge about one or a few target individuals, and spontaneously recognize a surveyed individual (Nosy Neighbour scenario). In such a case the External Archive contains one (or a few) records relative to detailed personal information. In the second case, we assume that the intruder (who might be an MF user) has access to a public register and that he or she tries to match the information provided by this EA, with that provided by the MF, in order to identify surveyed units. In such a case, the intruder’s chance of identifying a unit depends on the EA main characteristics, such as completeness, accuracy and data classification. Broadly speaking, we assume that the intruder has a lower chance of correctly identifying an individual when the information provided by the EA is not update, complete, accurate, or is classified according to standards different by those used in the statistical survey.

Moreover, as far as statistical disclosure control is concerned, experts are used to distinguish between social and economic microdata (without loss of generality we can consider respectively individuals and enterprises). In fact, the concept of disclosure risk is mainly based on the idea of rareness with respect to a set of identifying variables. For social survey microdata, because of the characteristics of the population under investigation and the nature of the data collected, identifying variables are mainly (or exclusively) categorical. For much of the information collected on enterprises however the identifying variables often take the form of quantitative variables with asymmetric distributions (Willenborg and de Waal, 2001). Disclosure scenarios are then described according to this statement.

The case study part of the Handbook contains examples of the Nosy Neighbour scenario and the EA scenario for social survey data. The issues involved with hierarchical and longitudinal data are also addressed. Finally, scenarios for business survey data are discussed.

In any case the definition of the scenario is essential as it defines the hypothesis underneath the risk estimation and the subsequent protection of the data.

3.3.3 Concepts and notation

For microdata, disclosure risk measures quantify the risk of re-identification. Individual per record disclosure risk measures are useful for identifying high-risk records and targeting the SDC methods. These individual risk measures can be aggregated to obtain global file level disclosure risk measures. These global risk measures are particularly useful to NSIs for their decision making process on whether the microdata is safe to be released and allows comparisons across different files.

Microdata disclosure

Disclosure in a microdata context means a correct record re-identification operation that is achieved by an intruder when comparing a target individual in a sample with an available list of units (external file) that contains individual identifiers such as name and address plus a set of identifying variables. Re-identification occurs when the unit in the released file and a unit in the external file belong to the same individual in the population. The underlying hypothesis is that the intruder will always try to match a unit in the sample \(s\) to be released and a unit in the external file using the identifying variables only. In addition, it is likely that the intruder will be interested in identifying those sample units that are unique on the identifying variables. A re-identification occurs when, based on a comparison of scores on the identifying variables, a unit \(i^*\) in the external file is selected as matching to a unit \(i\) in the sample and this link is correct and therefore confidential information about the individual is disclosed using the direct identifiers.

To define the disclosure scenario, the following assumptions are made. Most of them are conservative and contribute to the definition of a worst case scenario:

- a sample \(s\) from a population \(\mathcal{P}\) is to be released, and sampling design weights are available;

- the external file available to the intruder covers the whole population \(\mathcal{P}\); consequently for each \(i \in s\) the matching unit \(i^*\) does always exist in \(\mathcal{P}\);

- the external file available to the intruder contains the individual direct identifiers and a set of categorical identifying variables that are also present in the sample;

- the intruder tries to match a unit \(i\) in the sample with a unit \(i^*\) in the population register by comparing the values of the identifying variables in the two files;

- the intruder has no extra information other than that contained in the external file;

- a re-identification occurs when a link between a sample unit \(i\) and a population unit \(i^*\) is established and \(i^*\) is actually the individual of the population from which the sampled unit \(i\) was derived; e.g. the match has to be a correct match before an identification takes place.

Moreover we add the following assumptions:

- the intruder tries to match all the records in the sample with a record in the external file;

- the identifying variables agree on correct matches, that is no errors, missing values or time-changes occur in recording the identifying variables in the two microdata file.

Notation

The following notation is introduced here and used throughout the chapter when describing different methods for estimating the disclosure risk of microdata.

Suppose the key has \(K\) cells and each cell \(k = 1, \ldots, K\) is the cross-product of the categories of the identifying variables. In general, we will be looking at a contingency table spanned by the identifying variables in the microdata and not a single vector. The contingency table contains the sample counts and is typically very large and very sparse. Let the population size in cell \(k\) of the key be \(F_k\) and the sample size \(f_k\). Also:

\[ \sum_{k = 1}^{K}F_{k} = N,\quad \sum_{k = 1}^{K}f_{k} = n. \]

Formally the sample and population sizes in the models introduced in Section 3.3.5 and 3.3.6 are random and their expectations are denoted by \(n\) and \(N\) respectively. In practice, the sample and population size are usually replaced by their natural estimators; the actual sample and population sizes, assumed to be known.

Observing the values of the key on individual \(i \in s\) will classify such individual into one cell. We denote by \(k(i)\) the index of the cell into which individual \(i \in s\) is classified based on the values of the key.

According to the concept of re-identification disclosure given above, we define the (base) individual risk of disclosure of unit \(i\) in the sample as its probability of re-identification under the worst case scenario. Therefore the risk \(r_i\) that we get is certainly not smaller than the actual risk, the individual risk is a conservative estimate of the actual risk:

\[ r_{i}=\mathbb{P}\left( i \text{ correctly linked with } i^* \mid s , \mathcal{P} \text{, worst case scenario }\right) \tag{3.1}\]

All of the methods based on keys in the population described in this chapter aim to estimate this individual per-record disclosure risk measure that can be formulated as \(1/F_k\). The population frequencies \(F_k\) are unknown parameters and therefore need to be estimated from the sample. A global file-level disclosure risk measure can be calculated by aggregating the individual disclosure risk measures over the sample:

\[ \tau_{1} = \sum\limits_{k}^{}\frac{1}{F_{k}} \]

An alternative global risk measure can be calculated by aggregating the individual disclosure risk measures over the sample uniques of the cross-classified identifying variables. Since the uniques in the population \(F_k = 1\), are the dominant factor in the disclosure risk measure, we focus our attention on sample uniques \(f_k = 1\):

\[ \tau_{2} = \sum\limits_{k}^{}{I(f_{k} = 1)\frac{1}{F_{k}}} \]

where \(I\) represents an indicator function obtaining the value 1 if \(f_k = 1\) or 0 if not.

Both of these global risk measures can also be presented as rates by dividing by \(n\), the sample size or the number of uniques.

We assume that the \(f_k\) are observed but the \(F_k\) are not observed.

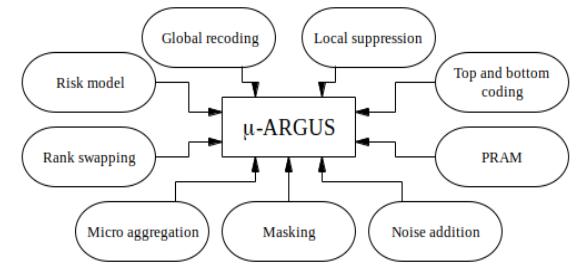

3.3.4 ARGUS threshold rule

The ARGUS threshold rule is based on easily applicable rules and views of safety/unsafety of microdata that is used at Statistics Netherlands. The implementation of these rules was the main reason to start the development of the software package \(\mu\)‑ARGUS.

In a disclosure scenario, keys a combination of identifying variables, are supposed to be used by an intruder to re-identify a respondent. Re-identification of a respondent can occur when this respondent is rare in the population with respect to a certain key value, i.e. a combination of values of identifying variables. Hence, rarity of respondents in the population with respect to certain key values should be avoided. When a respondent appears to be rare in the population with respect to a key value, then disclosure control measures should be taken to protect this respondent against re-identification.

Following the Nosy Neighbour scenario, the aim of the \(\mu\)‑ARGUS threshold rule is to avoid the occurrence of combinations of scores that are rare in the population and not only avoiding population-uniques. To define what is meant by rare the data protector has to choose a threshold value for each key. If a key occurs more often than this threshold the key is considered safe, otherwise the key must be protected because of the risk of re-identification.

The level of the threshold and the number and size of the keys to be inspected depend of course on the level of protection you want to achieve. Public use files require much more protection than microdata files under contract that are only available to researchers under a contract. How this rule is used in practice is given in the example of Section 3.7.

If a key is considered unsafe according to this rule, protection is required. Therefore often global recoding and local suppression are applied. These techniques are described in the sections 3.4.3.2 and 3.4.3.4.

3.3.5 ARGUS individual risk methodology

If a distinction between units rare in the sample from a unit rare in the population wants to be made then an inferential step may be followed. In the initial proposal by Benedetti and Franconi (1998), further developed in Franconi and Polettini (2004) and implemented in \(\mu\)‑ARGUS, the uncertainty on \(F_k\) is accounted for in a Bayesian fashion by introducing the distribution of the population frequencies given the sample frequencies. The individual risk of disclosure is then measured as the (posterior) mean of \(\frac{1}{F_k}\) with respect to the distribution of \(F_k|f_k\):

\[ r_{i} = \mathbb{E} \left( \frac{1}{F_{k}} \mid f_{k} \right) = \sum\limits_{h\geq f_{k}} \frac{1}{h} \mathbb{P} \left(F_{k} = h \mid f_{k} \right). \tag{3.2}\]

where the posterior distribution of \(F_k|f_k\) is negative binomial with success probability \(p_k\) and number of successes \(f_k\). As the risk is a function of \(f_k\) and \(p_k\) its estimate can be obtained by estimating \(p_k\). Benedetti and Franconi (1998) propose to use

\[ {\hat{p}}_{k} = \frac{f_{k}}{\sum\limits_{i:k(i)=k}^{}w_{i}} \tag{3.3}\]

where \(\sum\limits_{i:k(i)=k}^{}w_{i}\) is an estimate of \(F_k\) based on the sampling design weights \(w_i\), possibly calibrated (Deville and Särndal, 1992).

When is it possible to apply the individual risk estimation

The procedure relies on the assumption that the available data are a sample from a larger population. If the sampling weights are not available, or if data represent the whole population, the strategy used to estimate the individual risk is not meaningful.

In the \(\mu\)‑ARGUS manual (see e.g. Hundepool et al., 2014) a fully detailed description of the approach is reported. This brief note is based on Polettini (2004).

Assessing the risk for the whole file

The individual risk provides a measure of risk at the individual level. A global measure of disclosure risk for the whole file can be expressed in terms of the expected number of re-identifications in the file. The expected number of re-identifications is a measure of disclosure that depends on the number of records. For this reason, \(\mu\)‑ARGUS evaluates also the re‑identification rate that is independent of \(n\):

\[ \xi = \frac{1}{n}\sum\limits_{k=1}^{K}{f_{k}r_{k}} \quad . \]

\(\xi\) provides a measure of global risk, i.e. a measure of disclosure risk for the whole file, which does not depend on the sample size and can be used to assess the risk of the file or to compare different types of release; for the mathematical details see Polettini (2004).

The percentage of expected re-identifications, i.e. the value \(\psi=100\cdot\xi\%\) provides an equivalent measure of global risk.

Application of local suppression within the individual risk methodology

After the risk has been estimated, protection takes place. One option in protection is the application of local suppression (see Section 3.4.3.4).

In \(\mu\)‑ARGUS the technique of local suppression, when combined with the individual risk, is applied only to unsafe cells or combinations. Therefore, the user must input a threshold in terms of risk, e.g. probability of re-identification, to classify these as either safe or unsafe. Local suppression is applied to the unsafe individuals, so as to lower their probability of being re‑identified under the given threshold.

In order to select the risk threshold, that represents a level of acceptable risk, i.e. a risk value under which an individual can be considered safe, the re‑identification rate can be used. A release will be considered safe when the expected rate of correct re-identifications is below a level the NSI considers acceptable. As the re-identification rate is cast in terms of the individual risk, a threshold on the re-identification rate can be transformed into a threshold on the individual risk (see below). Under this approach, individuals are at risk because their probability of re-identification contributes a large proportion of expected re-identifications in the file.

In order to reduce the number of local suppressions, the procedure of releasing a safe file considers preliminary steps of protection using techniques such as global recoding (see Section 3.4.3.2). Recoding of selected variables will indeed lower the individual risk and therefore the re-identification rate of the file.

Threshold setting using the re-identification rate

Consider the re-identification rate \(\xi\): a key \(k\) contributes to \(\xi\) an amount \(r_kf_k\) of expected re‑identifications. Since units belonging to the same key \(k\) have the same individual risk, keys can be arranged in increasing order of risk \(r_k\). Let the subscript (\(k\)) denotes the \(k\)-th element in this ordering. A threshold \(r^*\) on the individual risk can be set. Consequently, unsafe cells are those for which \(r_{k} \geq r^*\) that can be indexed by \((k) = k^{*} + 1,\ldots,K\). The key \(k^{*}\) is in a one-to-one correspondence to \(r^{*}\). This allows setting an upper bound \(\xi^{*}\) on the re‑identification rate of the released file (after data protection) substituting \(r_kf_k\) with \(r^{*}f_{(k)}\) for each (\(k\)). For the mathematical details see Polettini (2004) and the Argus manual (e.g. Hundepool et al., 2014).

The approach pursued so far can be reversed. Therefore, selecting a threshold \(\tau\) on the re-identification rate \(\xi\) determines a key index \(k^{*}\) which corresponds to a value for \(r^{*}\). Using \(r^{*}\) as a threshold for the individual risk keeps the re‑identification rate \(\xi\) of the released file below \(\tau\). The search of such a \(k^{*}\) is performed by a simple iterative algorithm.

Releasing hierarchical files

A relevant characteristic of social microdata is its inherent hierarchical structure, which allows us to recognise groups of individuals in the file, the most typical case being the household. When defining the re-identification risk, it is important to take into account this dependence among units: indeed re-identification of an individual in the group may affect the probability of disclosure of all its members. So far, implementation of a hierarchical risk has been performed only with reference to households, i.e. a household risk.

Allowing for dependence in estimating the risk enables us to attain a higher level of safety than when merely considering the case of independence.

The household risk

The household risk makes use of the same framework defined for the individual risk. In particular, the concept of re-identification holds with the additional assumption that the intruder attempts a confidentiality breach by re-identification of individuals in households.

The household risk is defined as the probability that at least one individual in the household is re-identified. For a given household \(g\) of size \(|g|\), whose members are labelled \(i_1, \ldots, i_{|g|}\), the household risk is:

\[ r^{h}(g) = \mathbb{P} \left(i_{1} \cup i_{2} \cup \ldots \cup i_{|g|} \text { re-identified } \right) \]

and is the same for all the individuals in household \(g\) and equals \(r_{g}^{h}\).

Threshold setting for the household risk

Since all the individuals in a given household have the same household risk, the expected number of re‑identified records in household \(g\) equals \(|g|r_{g}^{h}\). The re‑identification rate in a hierarchical file can be then defined as \(\xi^{h} = \frac{1}{n}\sum\limits_{g=1}^{G}{|g|r_{g}^{h}}\), where \(G\) is the total number of households in the file. The re‑identification rate can be used to define a threshold \(r^{h^{\ast}}\) on the household risk \(r^{h}\), much in the same way as for the individual risk. For the mathematical details see Polettini (2004) and the Argus manual (e.g. Hundepool et al., 2014).

Note that the household risk \(r_{g}^{h}\) of household \(g\) is computed by the individual risks of its household members. For a given household, it might happen that a household is unsafe (\(r_{g}^{h}\) exceeds the threshold) because just one of its members, \(i\), say, has a high value \(r_{i}\) of the individual risk. To protect the households, the followed approach is therefore to protect individuals in households, first protecting those individuals who contribute most to the household risk. For this reason, inside unsafe households, detection of unsafe individuals is needed. In other words, the threshold on the household risk \(r^{h}\) has to be transformed into a threshold on the individual risk \(r_{i}\). To this aim, it can be noticed that the household risk is bounded by the sum of the individual risks of the members of the household: \(r_{g}^{h} \leq \sum\limits_{j=1}^{|g|}r_{i_{j}}\).

Consider to apply a threshold \(r^{h^{\ast}}\) on the household risk. In order for household \(g\) to be classified safe (i.e. \(r_{g}^{h} < r^{h^{\ast}}\)) it is sufficient that all of its components have individual risk less than \(\delta_{g} = r^{h ^{\ast}}/|g|\).

This is clearly an approach possibly leading to overprotection, as we check whether a bound on the household risk is below a given threshold.

It is important to remark that the threshold \(\delta_g\) just defined depends on the size of the household to which individual \(i\) belongs. This implies that for two individuals that are classified in the same key \(k\) (and therefore have the same individual risk \(r_{k}\)), but belong to different households with different sizes, it might happen that one is classified safe, while the other unsafe (unless the household size is included in the set of identifying variables).

In practice, denoting by \(g(i)\) the household to which record \(i\) belongs, the approach pursued so far consists in turning a threshold \(r^{h^{\ast}}\) on the household risk into a vector of thresholds on the individual risks \(r_{i} = 1,\ldots,n\):

\[ \delta_{g} = \delta_{g(i)} = \frac{r^{h^{\ast}}}{|g(i)|} \quad . \]

Individuals are finally set to unsafe whenever \(r_{i} \geq \delta_{g(i)}\); local suppression is then applied to those records, if requested. Suppression of these records ensures that after protection the household risk is below the threshold \(\delta_{g}\).

Choice of identifying variables in hierarchical files

For household data it is important to include in the identifying variables that are used to estimate the household risks also the available information on the household, such as the number of components or the household type.

Suppose one computes the risk using the household size as the only identifying variable in a household data file, and that such file contains households whose risk is above a fixed threshold. Since information on the number of components in the household cannot be removed from a file with household structure, these records cannot be safely released, and no suppression can make them safe. This permits to check for presence of very peculiar households (usually, the very large ones) that can be easily recognised in the population just by their size and whose main characteristic, namely their size, can be immediately computed from the file. For a discussion on this issue see Polettini (2004).

3.3.6 The Poisson model with log-linear modelling

As defined in Skinner and Elamir (2004), assuming that the \(F_{k}\) are independently Poisson distributed with means \(\left\{\lambda_{k} \right\}\) and assuming a Bernoulli sampling scheme with equal selection probably \(\pi\), then \(f_{k}\) and \(F_{k} - f_{k}\) are independently Poisson distributed as: \(f_{k} \mid \lambda_{k} \sim \operatorname{Pois} \left(\pi\lambda_{k} \right)\) and \(F_{k} - f_{k} \mid \lambda_{k} \sim \operatorname{Pois} \left( ( 1 - \pi ) \lambda_{k} \right)\) . The individual risk measure for a sample unique is defined as \(r_{k} = \mathbb{E}_{\lambda_{k}} \left( \frac{1}{F_{k}} \mid f_{k} = 1 \right)\) which is equal to:

\[ r_{k} = \frac{1}{\lambda_{k} (1 - \pi) } \left[ 1 - e^{ - \lambda_{k} (1 - \pi) } \right] \]

In this approach the parameters \(\left\{ \lambda_{k} \right\}\) are estimated by taking into account the structure and dependencies in the data through log-linear modelling. Assuming that the sample frequencies \(f_{k}\) are independently Poisson distributed with a mean of \(u_{k} = \pi\lambda_{k}\), a log-linear model for the \(u_{k}\) can be expressed as: \(\text{log}(u_{k}) = x_{k}^{'}\beta\) where \(x_{k}\) is a design vector denoting the main effects and interactions of the model for the key variables. Using standard procedures, such as iterative proportional fitting, we obtain the Poisson maximum-likelihood estimates for the vector \(\beta\) and calculate the fitted values: \({\hat{u}}_{k} = \text{exp}(x_{k}^{'}\hat{\beta})\). The estimate for \({\hat{\lambda}}_{k}\) is equal to \(\frac{{\hat{u}}_{k}}{\pi}\) which is substituted for \(\lambda_{k}\) in the above formula for \(r_{k}\). The individual disclosure risk measures can be aggregated to obtain a global (file-level) measure:

\[ {\hat{\tau}}_{2} = \sum\limits_{k \in \text{SU}}^{}{\hat{r_k} =}\sum\limits_{k \in \text{SU}}^{}{\frac{1}{{\hat{\lambda}}_{k}(1 - \pi)}\lbrack 1 - e^{- {\hat{\lambda}}_{k}(1 - \pi)}\rbrack} \]

where \(\text{SU}\) is the set of all sample uniques.

More details on this method are available from Skinner and Shlomo (2005, 2006) and Shlomo and Barton (2006).

Skinner and Shlomo (2005, 2006) have developed goodness-of-fit criteria for selecting the most robust log-linear model that will provide accurate estimates for the global disclosure risk measure detailed above. The method begins with a log-linear model where a high test statistic indicates under-fitting (i.e., the disclosure risk measures will be over-estimated). Then a forward search algorithm is employed by gradually adding in higher order interaction terms into the model until the test statistic approaches the level (based on a Normal distribution approximation) where the fit of the log-linear model is accepted.

This method is still under development. At present there is a need to develop clear and user-friendly software to implement the method. However, the Office for National Statistics in the UK has used it to inform microdata release decisions. The method is based on theoretical well-defined disclosure risk measures and goodness of fit criteria which ensure the fit of the log-linear model and the accuracy of the disclosure risk measures. It requires a model search algorithm which takes some computer time and requires intervention.

New methods for probabilistic risk assessment are under development based on a generalized Negative Binomial smoothing model for sample disclosure risk estimation which subsumes both the model used in \(\mu\)‑ARGUS and the Poisson log-linear model above. The method is useful for key variables that are ordinal where local neighbourhoods can be defined for inference on cell \(k\). The Bayesian assumption of \(\lambda_{k} \sim \text{Gamma}(\alpha_{k},\beta_{k})\) is added independently to the Poisson model above which then transforms the marginal distribution to the generalized Negative Binomial Distribution: \[ f_{k} \sim \text{NB}(\alpha_{k},p_{k} = \frac{1}{1 + \text{N}\pi_{k}\beta_{k}}) \] and

\[ F_{k}|f_{k} \sim \text{NB}(\alpha_{k} + f_{k},\rho_{k} = \frac{1 + \text{N}\pi_{k}\beta_{k}}{1 + \text{N}\beta_{k}}) \]

where \(\pi_{k}\) is the sampling fraction. In each local neighbourhood of cell k a smoothing polynomial regression model is carried out to estimate \(\alpha_{k}\) and \(\beta_{k}\), and disclosure risk measures are estimated based on the Negative Binomial Distribution, \({\hat{\tau}}_{2} = \sum_{k \in \text{SU}}^{}{\hat{r_k} =}\sum_{k \in \text{SU}}^{}\frac{{\hat{\rho}}_{k}(1 - {\hat{\rho}}_{k})^{{\hat{\alpha}}_{k}}}{{\hat{\alpha}}_{k}(1 - {\hat{\rho}}_{k})}\) , see: Rinott and Shlomo (2005, 2006).

3.3.7 SUDA

The Special Uniques Detection Algorithm (SUDA) (Elliot et.al., 2005) is a software system (windows application available as freeware under restricted licence) that provides disclosure risk broken down by record, variable, variable value and by interactions of those. It is based on the concept of a “special unique”. A special unique is a record that is a sample unique on a set of variables and that is also unique on a subset of those variables. Empirical work has shown that special uniques are more likely to be population unique than random uniques. Special uniques can be classified according to the size and number of the smallest subset of key variables that defines the record as unique, known as minimal sample uniques (MSU). In the algorithm, all MSUs are found for each record on all possible subsets of the key variables where the maximum size of the subsets m is specified by the user.

SUDA grades and orders records within a microdata file according to the level of risk. The method assigns a per record matching probability to a sample unique based on the number and size of minimal uniques. The DIS Measure (Skinner and Elliot, 2000) is the conditional probability of a correct match given a unique match:

\[ p(cm \mid um) = \frac{\sum\limits_{k = 1}^{K} I\left(f_{k} = 1 \right)}{\sum\limits_{k = 1}^{K} F_{k} I \left(f_{k} = 1 \right) } \]

and is estimated by a simple sample-based measure which is approximately unbiased without modelling assumptions. Elliot (2005) describes a heuristic which combines the DIS measure with scores resulting from the algorithm (i.e., SUDA scores). This method known as DIS-SUDA produces estimates of intruder confidence in a match against a given record being correct. This is closely related to the probability that the match is correct and is heuristically linked to the estimate of

\[ \tau_2 = \sum\limits_k{I(f_k=1)\frac{1}{F_k}} \]

The advantage of this method is that it relates to a practical model of data intrusion, and it is possible to compare different values directly. The disadvantages are that it is sensitive to level of the max MSU parameter and is calculated in a heuristic manner. In addition it is difficult to compare disclosure risk across different files. However, the method has been extensively tested and was used successfully for the detection of high-risk records in the UK Sample of Anonymized Records (SAR) drawn from the 2001 Census (Merrett, 2004). The assessment showed that the DIS-SUDA measure calculated from the algorithm provided a good estimate for the individual disclosure risk measure, especially for the case when the number of key variables, \(m = 6\). The algorithm also identifies the variables and value of variables that are contributing most to the disclosure risk of the record.

A new algorithm, SUDA2 has been developed, Elliot et al (2005), that improves SUDA using several methods. The development provides a much faster tool that can handle larger datasets.

3.3.8 Record Linkage

Roughly speaking, record linkage consists of linking each record \(a\) in file \(A\) (protected file) to a record \(b\) in file \(B\) (original file). The pair \((a,b)\) is a match if \(b\) turns out to be the original record corresponding to \(a\).

To apply this method to measure the risk of identity disclosure, it is assumed that an intruder has got an external dataset sharing some (key or outcome) variables with the released protected dataset and containing additionally some identifier variables (e.g. passport number, full name, etc.). The intruder is assumed to try to link the protected dataset with the external dataset using the shared variables. The number of matches gives an estimation of the number of protected records whose respondent can be re-identified by the intruder. Accordingly, disclosure risk is defined as the proportion of matches among the total number of records in \(A\).

The main types of record linkage used to measure identity disclosure in SDC are discussed below. An illustrative example can be found on the CASC-website as one of the case-studies linked to this handbook (see https://research.cbs.nl/casc/Handbook.htm#casestudies).

3.3.8.1 Distance-based record linkage

Distance-based record linkage consists of linking each record \(a\) in file \(A\) to its nearest record \(b\) in file \(B\). Therefore, this method requires a definition of a distance function for expressing nearness between records. This record-level distance can be constructed from distance functions defined at the level of variables. Construction of record-level distances requires standardizing variables to avoid scaling problems and assigning each variable a weight on the record-level distance.

Distance-based record linkage was first proposed in Pagliuca and Seri (1999) to assess the disclosure risk after microaggregation, see Section 3.4.2.3. Those authors used the Euclidean distance and equal weights for all variables. (Domingo-Ferrer and Torra, 2001) later used distance-based record linkage for evaluating other masking methods as well; in their empirical work, distance-based record linkage outperforms probabilistic record linkage (described below). Recently, (Torra and Miyamoto, 2004) have shown that method-specific distance functions might be defined to increase the proportion of matches for particular SDC methods.

The record linkage algorithm introduced in (Bacher, Brand and Bender, 2002) is similar in spirit to distance-based record linkage. This is so because it is based on cluster analysis and, therefore, links records that are near to each other.

The main advantages of using distances for record linkage are simplicity for the implementer and intuitiveness for the user. Another strong point is that subjective information (about individuals or variables) can be included in the re-identification process by properly modifying distances. In fact, the next version of the \(\mu\)‑ARGUS microdata protection package (e.g. Hundepool et al., 2014) will incorporate distance-based record linkage as a disclosure risk assessment method.

The main difficulty of distance-based record linkage consists of coming up with appropriate distances for the variables under consideration. For one thing, the weight of each variable must be decided and this decision is often not obvious. Choosing a suitable distance is also especially thorny in the cases of categorical variables and of masking methods such as local recoding where the masked file contains new labels with respect to the original dataset.

3.3.8.2 Probabilistic record linkage

Like distance-based record linkage, probabilistic record linkage aims at linking pairs of records \((a,b)\) in datasets \(A\) and \(B\), respectively. For each pair, an index is computed. Then, two thresholds \(LT\) and \(NLT\) in the index range are used to label the pair as linked, clerical or non-linked pair: if the index is above \(LT\), the pair is linked; if it is below \(NLT\), the pair is non-linked; a clerical pair is one that cannot be automatically classified as linked or non-linked and requires human inspection. When independence between variables is assumed, the index can be computed from the following conditional probabilities for each variable: the probability \(\mathbb{P}\left( 1\mid M \right)\) of coincidence between the values of the variable in two records \(a\) and \(b\) given that these records are a real match, and the probability \(\mathbb{P}\left( 0\mid U \right)\)of non-coincidence between the values of the variable given that \(a\) and \(b\) are a real unmatch.

Like in the previous section, disclosure risk is defined as the number of matches (linked pairs that are correctly linked) over the number of records in file \(A\).

To use probabilistic record linkage in an effective way, we need to set the thresholds \(LT\) and \(NLT\) and estimate the conditional probabilities \(\mathbb{P}\left( 1 \mid M \right)\) and \(\mathbb{P}\left( 0 \mid U \right)\) used in the computation of the indices. In plain words, thresholds are computed from: (i) the probability \(\mathbb{P}\left( \text{LP} \mid U \right)\) of linking a pair that is an unmatched pair (a false positive or false linkage) and (ii) the probability \(\mathbb{P}\left( \text{NP} \mid M \right)\)of not linking a pair that is a match (a false negative or false unlinkage). Conditional probabilities \(\mathbb{P}\left( 1 \mid M \right)\) and \(\mathbb{P}\left( 0 \mid U \right)\) are usually estimated using the EM algorithm (Dempster, Laird and Rubin 1977).

Original descriptions of this kind of record linkage can be found in Fellegi and Sunter (1969) and Jaro (1989). Torra and Domingo-Ferrer (2003) describe the method in detail (with examples) and Winkler (1993) presents a review of the state of the art on probabilistic record linkage. In particular, this latter paper includes a discussion concerning non-independent variables. A (hierarchical) graphical model has recently been proposed (Ravikumar and Cohen, 2004) that compares favourably with previous approaches.

Probabilistic record linkage methods are less simple than distance-based ones. However, they do not require rescaling or weighting of variables. The user only needs to provide two probabilities as input: the maximum acceptable probability \(\mathbb{P}\left( \text{LP} \mid U \right)\) of false positive and the maximum acceptable probability \(\mathbb{P}\left( \text{NP} \mid M \right)\) of false negative.

3.3.8.3 Other record linkage methods

Recently, the use of other record linkage methods has also been considered for disclosure risk assessment. While in the previous record linkage methods it is assumed that the two files to be linked share a set of variables, other methods have been developed where this constraint is relaxed. Under appropriate conditions, (Torra, 2004) shows that re-identification is still possible when files do not share any variables. Domingo-Ferrer and Torra (2003) propose the use of such methods for disclosure risk assessment.

3.3.9 References

Bacher J., Brand R., and Bender S. (2002), Re-identifying register data by survey data using cluster analysis: an empirical study. International Journal of Uncertainty, Fuzziness and Knowledge Based Systems, 10(5):589–607, 2002.

Benedetti, R. and Franconi, L. (1998). Statistical and technological solutions for controlled data dissemination, Pre-proceedings of New Techniques and Technologies for Statistics, 1, 225-232.

Coppola, L. and Seri, G. (2005). Confidentiality aspects of household panel survey: the case study of Italian sample from EU-SILC. Monographs of official statistics – Proceedings of the Work session on statistical data confidentiality – Geneve 9-11 November 2005, 175-180.

Cox, L.H. (1995). Protecting confidentiality in business surveys. Business Survey Methods, Cox, B.G., Binder, D.A., Chinnappa, B.N., Christianson, A., Colledge, M.J. e Kott, P.S. (Eds.), New-York: Wiley, 443‑476.

Dempster A. P., Laird N. M., and Rubin D. B. (1977), Maximum likelihood from incomplete data via the em algorithm. Journal of the Royal Statistical Society, 39:1–38, 1977.

Deville, J.C. and Särndal, C.E. (1992). Calibration estimators in survey sampling, Journal of the American Statistical Association 87, 367–382.

Domingo-Ferrer J., and Torra, V. (2001), A quantitative comparison of disclosure control methods for microdata. In P. Doyle, J. I. Lane, J. J. M. Theeuwes, and L. Zayatz, editors, Confidentiality, Disclosure and Data Access: Theory and Practical Applications for Statistical Agencies, pages 111–134, Amsterdam, 2001. North-Holland. https://crises-deim.urv.cat/webCrises/publications/bcpi/cliatpasa01Aquantitative.pdf.

Domingo-Ferrer, J., and Torra, V. (2003), Disclosure risk assessment in statistical microdata protection via advanced record linkage. Statistics and Computing, 13:343–354.

Elamir, E., Skinner, C. (2004) Record-level Measures of Disclosure Risk for Survey Microdata, Journal of Official Statistics, Vol. 22, No. 3, 2006, pp. 525–539. See also: Southampton Statistical Sciences Research Institute, University of Southampton, methodology working paper:

https://eprints.soton.ac.uk/8175/

Elliot, M. J., (2000). DIS: A new approach to the Measurement of Statistical Disclosure Risk. International Journal of Risk Management 2(4), pp 39-48.

Elliot, M. J., Manning, A. M.& Ford, R. W. (2002). 'A Computational Algorithm for Handling the Special Uniques Problem'. International Journal of Uncertainty, Fuzziness and Knowledge Based Systems 5(10), pp 493-509.

Elliot, M. J., Manning, A., Mayes, K., Gurd, J. & Bane, M. (2005). ’SUDA: A Program for Detecting Special Uniques’. Proceedings of the UNECE/Eurostat work session on statistical data confidentiality, Geneva, November 2005

Elliot, M. J., Skinner, C. J., and Dale, A. (1998). 'Special Uniques, Random Uniques, and Sticky Populations: Some Counterintuitive Effects of Geographical Detail on Disclosure Risk'. Research in Official Statistics 1(2), pp 53-67.

Fellegi, I. P., and Sunter, A.B. (1969), A theory for record linkage. Journal of the American Statistical Association, 64(328):1183–1210.

Franconi, L. and Polettini, S. (2004). Individual risk estimation in \(\mu\)-ARGUS: a review. In: Domingo-Ferrer, J. (Ed.), Privacy in Statistical Databases. Lecture Notes in Computer Science. Springer, 262‑272

Franconi, L. and Seri, G. (2000). Microdata Protection at the Italian National Statistical Insititute (Istat): A User Perspective. Of Significance Journal of the Association of Public Data Users – Volume 2 Number 1 2000, page. 57-64.

Hundepool, A., Van de Wetering, A., Ramaswamy, R., Franconi, L., Capobianchi, A., de Wolf, P.P., Domingo-Ferrer, J., Torra, V., Brand, R., and Giessing, S. (2014), \(\mu\)-ARGUS version 5.1 Software and User’s Manual. Statistics Netherlands, Voorburg NL, 2014. https://research.cbs.nl/casc/Software/MUmanual5.1.3.pdf.

Jackson, P., Longhurst, J. (2005), Providing access to data and making microdata safe, experiences of the ONS, proceedings of the UNECE/Eurostat work session on statistical data confidentiality, Geneva, November 2005

Jaro, M.A. (1989), Advances in record-linkage methodology as applied to matching the 1985 census of tampa, florida. Journal of the American Statistical Association, 84(406):414–420.

Pagliuca, D. and Seri, G. (1999), Some results of individual ranking method on the system of enterprise accounts annual survey, Esprit SDC Project, Deliverable MI-3/D2.

Polettini, S. and Seri, G (2004). Revision of “Guidelines for the protection of social microdata using the individual risk methodology”. Deliverable 1.2-D3, available at CASC web site.

Ravikumar, P., and Cohen, W.W. (2004),. A hierarchical graphical model for record linkage. In UAI 2004, USA, 2004. Association for Uncertainty in Artificial Intelligence.

Rinott, Y. ,Shlomo, N (2006) A Generalized Negative Binomial Smoothing Model for Sample Disclosure Risk Estimation ,. PSD'2006 Privacy in Statistical Databases, Springer LNCS proceedings, to appear.

Rinott, Y., Shlomo, N. (forthcoming) A Smoothing Model for Sample Disclosure Risk Estimation, Volume in memory of Yehuda Vardi in the IMS Lecture Notes Monograph Series.

Shlomo, N. (2006), Review of statistical disclosure control methods for census frequency tables, ONS Survey Methodology Bulletin.

Shlomo, N., Barton, J. (2006) Comparison of Methods for Estimating Disclosure Risk Measures for Microdata at the UK Office for National Statistics, PSD'2006 Privacy in Statistical Databases Conference, CD Proceedings, to appear